“AWS EKS vs. Fargate – Unveiling the Differences”

In the ever-evolving landscape of cloud computing, the orchestration of containerized applications has become a pivotal aspect of modern development. In this blog post, we delve into the distinctions between two prominent services offered by Amazon Web Services (AWS): Elastic Kubernetes Service (EKS) and Fargate. As we unravel the nuances of traditional Kubernetes deployment and compare it with the managed solutions provided by AWS, we aim to guide you in making informed decisions based on your specific use cases and preferences.

Kubernetes

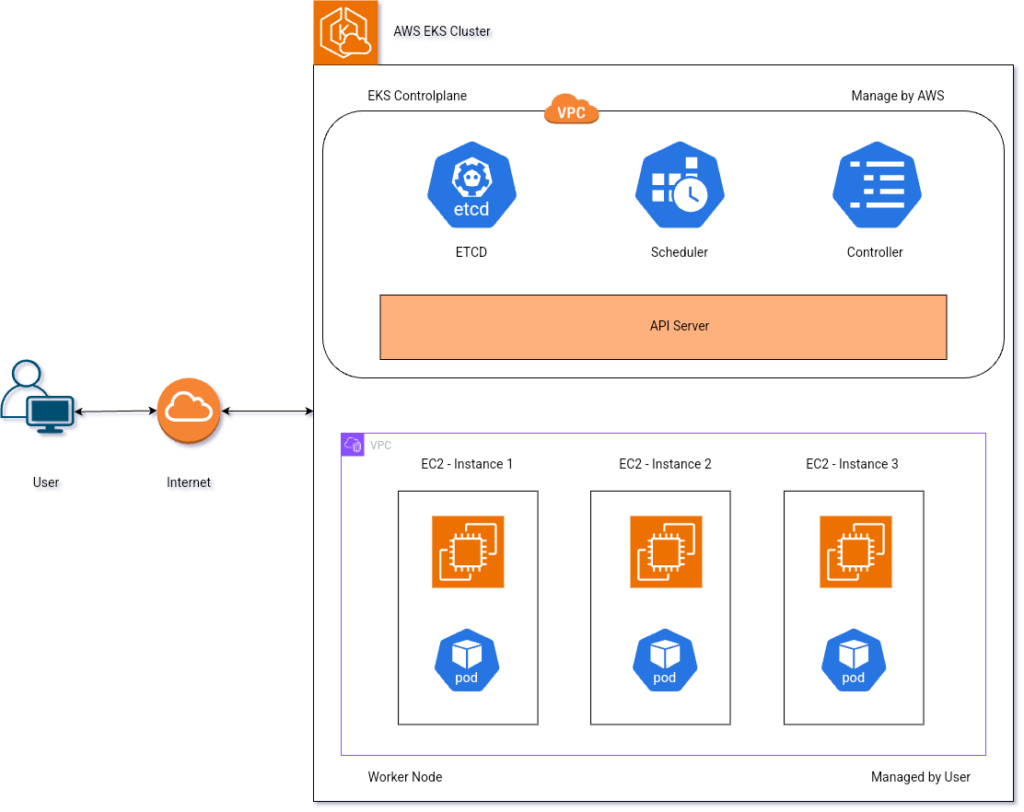

Amazon EKS (Elastic Kubernetes Service) is a managed Kubernetes service provided by Amazon Web Services (AWS). Kubernetes is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications. AWS EKS simplifies the process of running Kubernetes on AWS by handling many of the complex tasks associated with setting up, operating, and scaling a Kubernetes cluster.

What if you use traditional Kubernetes?

- Control Plane Setup:- In traditional Kubernetes, you are responsible for setting up and managing the components of the cluster on your own. You have to create the control plane (master node) and manage it yourself, manually installing control plane components such as etcd, the API server, the scheduler, and the controller manager.

- Node Provisioning:- You have to provision and manage the worker nodes that run your containerized applications. You have to manually deploy your containers on these nodes.

- Networking Configuration:- Configuring the network to enable communication between containers running on different nodes requires manual setup. You need to establish communication between the master node and worker nodes, as well as between different worker nodes.

- Security Configuration:- Implementing security measures, such as authentication and authorization, is necessary to ensure secure access to the cluster.

- Scaling and High Availability:- Managing the scaling of worker nodes based on application demand and setting up high-availability configurations are part of the responsibilities.

- Integration with Cloud Services:- If you are using a cloud provider like AWS, you would need to integrate Kubernetes with other AWS services manually.

AWS EKS (Elastic Kubernetes Service)

What is AWS EKS, and what are the benefits of it?

AWS EKS is a managed Kubernetes service that takes care of many operational tasks, differentiating it from traditional Kubernetes. The key distinctions include:

- Managed Control Plane:- With EKS, AWS handles the Kubernetes control plane, managing its setup, scaling, and maintenance. This reduces operational overhead, eliminating the need for users to install all Kubernetes components, as AWS manages the entire control plane.

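- Automated Node Management:- With managed node groups, EKS automates much of worker node management, including provisioning, scaling, and replacing the underlying EC2 instances. You still define the node groups and their size limits yourself.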

- Integration with AWS Services:- EKS integrates with various AWS services, simplifying tasks such as container image storage (using Amazon ECR), networking (using Amazon VPC), and security (using IAM). Most of the manual wiring that a self-managed cluster needs for these services is not required.

- Simplified Operations:- AWS EKS streamlines the operational aspects of running Kubernetes, enabling users to focus more on deploying and managing applications rather than dealing with Kubernetes infrastructure.

- Easier Scaling:- EKS supports auto-scaling for worker nodes, allowing the cluster to automatically adjust to changes in application demand.

What do we have to manage in EKS?

While Amazon EKS (Elastic Kubernetes Service) is a managed service that abstracts away much of the underlying infrastructure and operational complexities, there are still certain aspects that users need to manage when working with EKS. Here are some of the key responsibilities of users in an EKS environment.

- Cluster Configuration:- Users need to configure and define the structure of their Kubernetes clusters. This includes specifying details such as networking configurations, instance types for worker nodes, and other cluster-related settings.

- Worker Node Groups:- Users are responsible for creating worker node groups. These groups determine the underlying compute resources (EC2 instances) that run the containerized workloads. Users can scale these node groups based on the demands of their applications.

- Application Deployments:- Users need to define and deploy their containerized applications using Kubernetes manifests (YAML files). This includes creating Deployments, Services, ConfigMaps, and other Kubernetes resources to define the desired state of their applications.

- Monitoring and Logging:- Users are responsible for setting up monitoring and logging solutions to track the health and performance of their applications and the EKS cluster. This may involve using tools like AWS CloudWatch, AWS X-Ray, or third-party monitoring solutions.

- Cost Management:- Monitoring and managing the costs associated with EKS are also user responsibilities. This includes understanding the pricing model, optimizing resource usage, and potentially using AWS Cost Explorer or other tools for cost analysis.

- Cluster Upgrades:- Users may need to manage the process of upgrading the EKS cluster to newer Kubernetes versions. This involves testing compatibility with existing applications and scheduling controlled upgrades.

- Technical Expertise:- A technical team is required to have expertise in Kubernetes concepts, configurations, and best practices. Understanding how to effectively utilize and troubleshoot Kubernetes resources, such as pods, services, and deployments, is essential for efficient cluster management.
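
As a rough illustration of the "Worker Node Groups" responsibility above, the sketch below builds the parameters for a node group with minimum, desired, and maximum sizes. The dictionary keys mirror the keyword arguments of boto3's `create_nodegroup` call for EKS, but the helper function, the cluster and node group names, the role ARN, the subnet IDs, and the instance type are all made-up placeholders. Nothing is sent to AWS here; the actual call is left as a comment.

```python
def build_nodegroup_params(cluster_name, nodegroup_name, node_role_arn,
                           subnet_ids, instance_type="t3.medium",
                           min_size=1, desired_size=2, max_size=4):
    """Return keyword arguments shaped like boto3's EKS create_nodegroup call."""
    # The desired size has to sit between the scaling limits.
    if not (min_size <= desired_size <= max_size):
        raise ValueError("expected min_size <= desired_size <= max_size")
    if not subnet_ids:
        raise ValueError("at least one subnet ID is required")
    return {
        "clusterName": cluster_name,
        "nodegroupName": nodegroup_name,
        "nodeRole": node_role_arn,
        "subnets": list(subnet_ids),
        "instanceTypes": [instance_type],
        "scalingConfig": {
            "minSize": min_size,
            "desiredSize": desired_size,
            "maxSize": max_size,
        },
    }


# All values below are hypothetical placeholders.
params = build_nodegroup_params(
    cluster_name="demo-cluster",
    nodegroup_name="demo-workers",
    node_role_arn="arn:aws:iam::123456789012:role/demo-node-role",
    subnet_ids=["subnet-aaaa1111", "subnet-bbbb2222"],
)
print(params["scalingConfig"])

# With boto3 installed and AWS credentials configured, the request would be:
# boto3.client("eks").create_nodegroup(**params)
```

Keeping the parameters in a plain dictionary makes it easy to review or version-control the node group settings before anything is created.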

In simple words, AWS EKS significantly simplifies the management of the Kubernetes control plane (master node), so we don’t need to look after the control plane components. Features like autoscaling and load balancing are available. However, we have to create the worker node groups ourselves and define pods, replicas, services, and deployments. We also have to take care of patch updates for the worker nodes.
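
To give a feel for what "defining pods, replicas, services, and deployments" looks like, here is a minimal sketch that builds a Deployment and a Service as Python dictionaries and prints them as JSON, which `kubectl apply -f` accepts alongside YAML. The helper functions, the application name `web`, and the `nginx:1.25` image are illustrative assumptions, not part of any real setup.

```python
import json


def build_deployment(name, image, replicas=2, container_port=80):
    """Build a Deployment manifest that runs `replicas` copies of one container."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": container_port}],
                        }
                    ]
                },
            },
        },
    }


def build_service(name, port=80, target_port=80):
    """Build a Service manifest that routes traffic to pods labelled app=<name>."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "selector": {"app": name},
            "ports": [{"port": port, "targetPort": target_port}],
        },
    }


# Hypothetical application name and image, chosen only for the example.
deployment = build_deployment("web", "nginx:1.25", replicas=3)
service = build_service("web")

# Wrap both resources in a List so they can live in a single file.
manifest = {"apiVersion": "v1", "kind": "List", "items": [deployment, service]}
manifest_json = json.dumps(manifest, indent=2)
print(manifest_json)
```

Saving the printed output to a file such as `web.json` and running `kubectl apply -f web.json` against the cluster would create both resources, assuming `kubectl` is already pointed at the EKS cluster.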

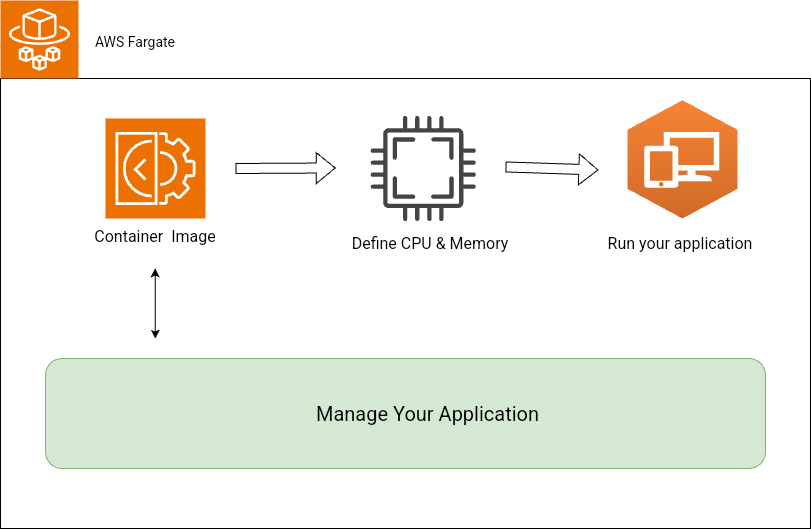

AWS Fargate

AWS Fargate is a serverless compute engine for containers provided by Amazon Web Services (AWS). It allows you to run containers without managing the underlying infrastructure. With Fargate, you don’t need to provision or manage servers; you only pay for the vCPU and memory that you use for your containers.
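
To make the "pay only for the vCPU and memory you use" idea concrete, here is a small back-of-the-envelope sketch. The two hourly rates are made-up placeholders, not real AWS prices (actual rates vary by region and change over time), and the function name is our own.

```python
# Placeholder rates for illustration only. These are NOT real AWS prices.
VCPU_RATE_PER_HOUR = 0.04   # hypothetical cost of 1 vCPU for one hour
GB_RATE_PER_HOUR = 0.004    # hypothetical cost of 1 GB of memory for one hour


def estimate_fargate_cost(vcpu, memory_gb, hours, task_count=1,
                          vcpu_rate=VCPU_RATE_PER_HOUR,
                          gb_rate=GB_RATE_PER_HOUR):
    """Estimate cost as (vCPU charge + memory charge) x hours x number of tasks."""
    if min(vcpu, memory_gb, hours, task_count) < 0:
        raise ValueError("inputs must not be negative")
    per_task_hour = vcpu * vcpu_rate + memory_gb * gb_rate
    return per_task_hour * hours * task_count


# Two tasks, each with 0.5 vCPU and 1 GB of memory, running for 100 hours.
cost = estimate_fargate_cost(vcpu=0.5, memory_gb=1, hours=100, task_count=2)
print(f"Estimated cost: ${cost:.2f}")
```

The point is the shape of the formula: the bill grows with the size of each task, how long it runs, and how many tasks are running, rather than with the number of servers you keep around.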

What is the meaning of serverless? Does it imply that applications run without servers? No, that’s not the case. Serverless means you don’t have to worry about managing servers. Instead of focusing on the infrastructure, including servers, hardware, networking, and EC2 machines, you can concentrate on writing and running your code or applications. You build your application, make changes as needed, and deploy it with just a few clicks. In the backend, AWS manages all the physical hardware for you. The cloud provider (like AWS, Azure, or Google Cloud) takes care of all server-related tasks, such as provisioning, scaling, and maintenance, allowing you to focus more on your code and less on the underlying infrastructure. It doesn’t mean there are no servers; it means you don’t have to manage them directly.

Key features of AWS Fargate include:

- Serverless Deployment:- You don’t need to manage the underlying infrastructure. AWS Fargate abstracts away the servers, allowing you to focus on your applications and containers.

- Container Orchestration:- Fargate is not an orchestrator itself. It is the compute layer used by AWS orchestration services: Amazon Elastic Container Service (ECS) and, for Kubernetes, Amazon EKS. Together with these services, it allows you to easily deploy, manage, and scale containerized applications.

- Resource Isolation:- Each task (a set of containers) in AWS Fargate runs in its own dedicated environment, providing resource isolation and security. You deploy your application as a task, and for each task you specify the container image, CPU, and memory. A single task can include multiple containers, each with its own image.

- Auto Scaling:- You can configure auto-scaling policies to dynamically adjust the number of running tasks based on demand. When you set up auto-scaling for your Fargate service, you typically define scaling policies based on metrics such as CPU utilization or memory utilization, and AWS adjusts the number of running tasks according to those metrics.

- a. Scale-Out Policy:- If the CPU or memory utilization crosses a certain threshold indicating high load, AWS can trigger a scale-out policy. This policy can instruct AWS to add more tasks (and therefore more containers) to your Fargate service to handle the increased load.

- b. Scale-In Policy:- Conversely, if the load decreases and the CPU or memory utilization drops below a specified threshold, AWS can trigger a scale-in policy. This policy can instruct AWS to reduce the number of tasks, thus removing containers to save resources.

- Integrated Networking:- Fargate seamlessly integrates with Amazon VPC (Virtual Private Cloud) for networking, allowing you to build isolated and secure environments for your containers.
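
The task sizing and the scale-out/scale-in policies described above can be sketched in a few lines. The first function builds a task definition using the field names ECS expects for Fargate tasks (`requiresCompatibilities`, `networkMode`, `cpu`, `memory`, `containerDefinitions`). The second is a simplified stand-in for the threshold logic of a scaling policy, not the actual AWS implementation. The thresholds, task limits, family name, and image are all assumptions made for the example.

```python
def build_task_definition(family, image, cpu="256", memory="512"):
    """Build a Fargate-style ECS task definition with a single container."""
    return {
        "family": family,
        "requiresCompatibilities": ["FARGATE"],
        "networkMode": "awsvpc",   # the network mode Fargate tasks use
        "cpu": cpu,                # CPU units as a string; 1024 units = 1 vCPU
        "memory": memory,          # memory in MiB as a string
        "containerDefinitions": [
            {"name": family, "image": image, "essential": True}
        ],
    }


def desired_task_count(current, cpu_percent, scale_out_at=70.0,
                       scale_in_at=30.0, min_tasks=1, max_tasks=10, step=1):
    """Return the new task count after applying simple threshold rules."""
    if cpu_percent > scale_out_at:
        target = current + step    # high load: scale out
    elif cpu_percent < scale_in_at:
        target = current - step    # low load: scale in
    else:
        target = current           # within the band: no change
    # Never go below the minimum or above the maximum number of tasks.
    return max(min_tasks, min(max_tasks, target))


# Hypothetical family name and image.
task_def = build_task_definition("web", "nginx:1.25")
print(task_def["cpu"], task_def["memory"])

print(desired_task_count(current=2, cpu_percent=85))   # load above the scale-out threshold
print(desired_task_count(current=2, cpu_percent=10))   # load below the scale-in threshold
print(desired_task_count(current=2, cpu_percent=50))   # load inside the band
```

In a real service, AWS evaluates the metrics and applies the policy for you; the sketch only shows the kind of decision being made on each evaluation.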

Which One to Choose: AWS EKS or Fargate?

The decision is entirely based on your requirements, complexity, and the level of flexibility you desire. Here are some points to consider.

Use AWS EKS when:

- a. You have a complex application with advanced orchestration requirements.

- b. You are already familiar with Kubernetes or have specific dependencies on Kubernetes features.

- c. Your team has expertise in managing Kubernetes clusters.

- d. You want more control over the infrastructure to tailor it according to your needs.

Use AWS Fargate when:

- a. You prefer a serverless approach and want to abstract away infrastructure management.

- b. Your application is less complex, and you want a simpler way to run containers without dealing with Kubernetes complexities.

- c. You want automatic scaling and prefer paying only for the resources used by your containers without managing the underlying infrastructure.

- d. You want a more straightforward approach, just aiming to quickly deploy your application without worrying about internal factors.
